
This case study details the design and validation of All Hub Context, a conversational multi-agent system created to bridge the gap between a user's intention and the creation of professional deliverables (like PRDs or architecture documents).

Through a guided co-creation process, an orchestrator agent coordinates AI specialists on an interactive canvas, applying governance principles like transparency, control, and ethics.

The result is a high-fidelity functional prototype (AI Spike) that demonstrates how a collaborative and intuitive experience can simplify complex processes without sacrificing quality or trust.

This is not an MVP but a high-fidelity functional prototype (AI Spike) built to validate the proposed user experience.

Anyone who creates deliverables with AI help struggles with more than the "amnesia" of LLMs. The real challenge is the chasm between a high-level intention ("I need the architecture for a new app") and a finished, structured, professional deliverable or artifact.

The current process is an unstructured dialogue with generic LLMs, which produces inconsistent results and requires extensive refactoring and manual editing.

The fundamental problem is not a lack of generative capacity, but the absence of a guided co-creation process by specialized agents that understand the structure of professional deliverables and can collaborate with the user to build them.

The problem: a gap between user intent and the final deliverable.

Intent: "I need the architecture for a new app" → Deliverable: a complete, structured architecture document.

My role was to design a multi-agent system that would bridge the gap between user intention and the deliverable. The vision pivoted from a simple "prompting tool" to a "team of specialized on-demand AI agents," where conversation is the method of collaboration and the canvas is the shared whiteboard.

Below, I detail the tools I used to develop the functional prototype (AI Spike) for the All Hub Context project, which focuses on solving complex problems in node-based workflows with a user-centric approach.

I used Perplexity to research and synthesize information on workflows and user needs in AI environments, identifying pain points and mapping requirements to inform design decisions.

I leveraged Gemini Deep Research in Google AI Studio to analyze extensive documents and define the multi-agent system architecture, validating complex concepts for a robust user experience.

I used Google AI Studio for metaprompting, project structure design, code review and correction, and rapid prototyping of conversational interactions, optimizing the user experience.

I used Mermaid to create dynamic diagrams that visualized the system architecture and interaction flows, facilitating concept communication and validation of the design logic.

I designed and prototyped multi-turn conversational flows with Dialogflow CX, ensuring natural and effective interactions between users and AI agents on the shared whiteboard.

I implemented the frontend and backend of the AI Spike prototype with Firebase Studio, integrating conversational interactions and AI data into an interactive canvas to validate the user experience.

These tools allowed me to design an innovative system aligned with user needs and validate a high-fidelity functional prototype that demonstrated a collaborative and effective user experience.

The process began with digital ethnographic research to quantify and qualify the "Context Crisis".

A sample of more than 100 discussions from forums such as Reddit (r/LLMDevs) was analyzed, and the top 25 pain points were tabulated. The analysis revealed that manual context management (pain points #1, #2, #5, and #23) was the 20% of causes that generated 80% of the frustration (Pareto Principle).
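The Pareto reading can be reproduced with a cumulative-share calculation: rank the tabulated pain points by how often they were mentioned and keep the smallest group that reaches 80% of all mentions. This is an illustrative sketch only. The mention counts below are invented (the study's raw tallies are not published here), and only the pain-point numbers #1, #2, #5, and #23 come from the text; the function name `pareto_vital_few` is hypothetical.

```python
from typing import Dict, List, Tuple


def pareto_vital_few(counts: Dict[str, int], target: float = 0.8) -> Tuple[List[str], float]:
    """Return the smallest set of causes, taken in descending order of
    frequency, whose cumulative share of all mentions reaches `target`."""
    total = sum(counts.values())
    if total == 0:
        return [], 0.0
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    selected: List[str] = []
    cumulative = 0
    for cause, n in ranked:
        selected.append(cause)
        cumulative += n
        if cumulative / total >= target:
            break
    return selected, cumulative / total


# Invented tallies for illustration; these are NOT the study's figures.
mentions = {"#1": 35, "#2": 25, "#5": 12, "#23": 10, "#7": 8, "#12": 6, "#18": 4}

vital_few, share = pareto_vital_few(mentions)
print(vital_few, round(share, 2))  # ['#1', '#2', '#5', '#23'] 0.82
```

With real tallies, the same function shows whether a small group of causes really dominates; if the selected group grows large, the 80/20 reading does not hold.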

Manual context management (loss of focus, repetitive work, errors) → user frustration (low quality, abandonment, distrust in AI).

I conducted digital ethnographic research, analyzing more than 100 discussions on forums such as Reddit (r/LLMDevs) along with user interviews, to identify the main pain points in creating deliverables with AI.

Loss of critical information, repetitive work to maintain context.

Inconsistent results, model hallucinations.

Unstructured dialogues, lack of collaborative tools.

Token limits, lack of version control.

Content Creator

"I spend more time preparing prompts than solving problems."

"Why doesn't the AI remember what I told it?"

Feels: frustration, exhaustion.

Does: rewrites prompts repeatedly.

Developer

"Trimming logs for token limits ruins my analysis."

"I need a tool that manages context better."

Feels: helplessness, distrust.

Does: manually edits data.

The user's journey was mapped to identify the zones of maximum emotional and operational friction.

Ideation: basic tools, high motivation.

Research: multiple data sources.

Synthesis: problems and complexity emerge.

Editing: difficulty preparing documentation.

Finalization: document reviewed, high confidence.

Figure: emotional curve across the journey stages.

By reframing the question with Occam's Razor, we shifted from "improving an LLM" (a technical, abstract approach) to "designing a co-creation dialogue" (a concrete, user-centered experience).

This avoids premature solution bias and guides the UX team toward a design that leverages AI to actively help the user, not just impress them with technology.

The original question was: "How can we make an LLM that better understands our document templates?"

Instead of improving a generic LLM, we reframed the problem: How do we design a conversational system that guides the user in co-creating professional deliverables, using specialized agents that structure the process and hide technical complexity? This question led us to design All Hub Context, a system that prioritizes human-AI collaboration and user-centric governance.

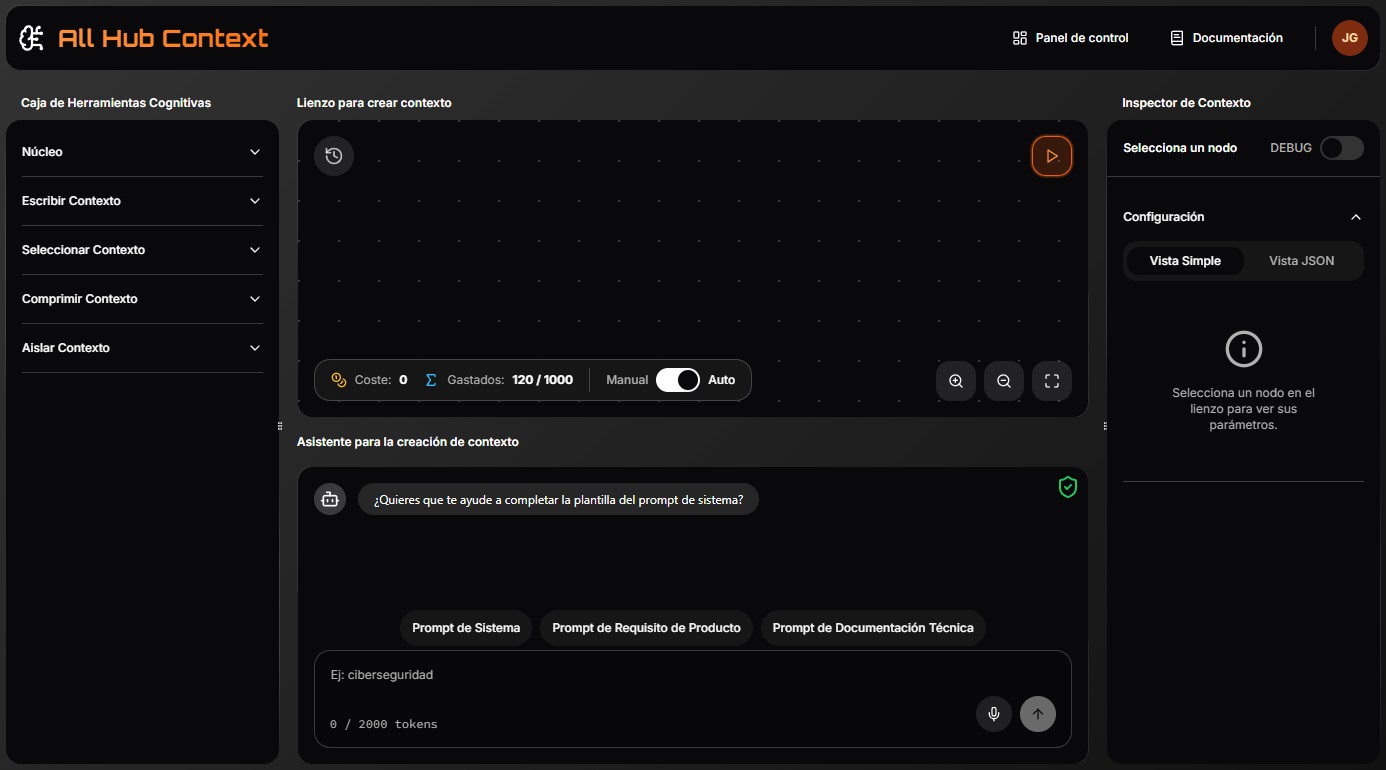

All Hub Context is a multi-agent system that transforms user intent into professional deliverables through a guided dialogue and an interactive canvas.

An orchestrator agent coordinates specialized agents (e.g., one for structuring PRDs, another for architecture diagrams) that break down complex tasks into simple steps, hiding technical complexity (Tesler's Law).
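The routing step can be sketched as a registry of specialists plus a selector. This is a minimal sketch under stated assumptions: keyword matching stands in for real intent detection (an LLM call or a Dialogflow CX intent in practice), `arch_agent` and `prompt_system_agent` are borrowed from the simulated audit log, and `prd_agent`, the keyword lists, and the step names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SpecialistAgent:
    name: str
    keywords: List[str]  # hypothetical trigger phrases for this specialist
    steps: List[str]     # the simple steps it walks the user through


@dataclass
class Orchestrator:
    agents: List[SpecialistAgent] = field(default_factory=list)

    def route(self, message: str) -> Optional[SpecialistAgent]:
        """Return the specialist whose keywords best match the message, or
        None if nothing matches (the orchestrator would then ask a
        clarifying question). Keyword matching stands in for intent detection."""
        text = message.lower()
        best: Optional[SpecialistAgent] = None
        best_hits = 0
        for agent in self.agents:
            hits = sum(1 for kw in agent.keywords if kw in text)
            if hits > best_hits:
                best, best_hits = agent, hits
        return best


orchestrator = Orchestrator([
    SpecialistAgent("prd_agent", ["prd", "requirements"], ["Objective", "Features", "KPIs"]),
    SpecialistAgent("arch_agent", ["architecture", "diagram"], ["Components", "Data flow"]),
    SpecialistAgent("prompt_system_agent", ["system prompt"], ["Role", "Purpose", "Constraints"]),
])

chosen = orchestrator.route("I need the architecture for a new app")
print(chosen.name, chosen.steps)  # arch_agent ['Components', 'Data flow']
```

Keeping each specialist's steps on the agent itself is what lets the orchestrator hide the structure from the user: the user only states the goal, and the chosen agent supplies the sequence.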

The user collaborates in real time through a chat and a visual canvas, with full control to intervene, pause, or adjust (Human-in-the-Loop).

The canvas visualizes the node flow, showing how agents build the deliverable.

Users can pause, edit, or prioritize agent actions.

Alerts to detect potential biases or errors in agent decisions.

Action history to track decisions and export reports.

Robust security to protect data on Google Cloud.

Filters prompts and responses to prevent security risks.

Intuitive interface, with no prior training needed.

Guaranteed accessibility for users with disabilities.

Multilingual support for a global experience.

This high-fidelity prototype (AI Spike) simulates these interactions, validating an intuitive experience aligned with user needs.

The diagram shows the Guided Co-Creation Architecture: the user starts the conversation with the orchestrator by expressing an intent such as "I want to create a System Prompt"; the orchestrator immediately invokes the specialist agent, which applies the Law of Simplicity to break the task down into a sequence of short, consecutive questions.

Each answer is automatically chained to the next until the final System Prompt is assembled; the orchestrator then returns it to the user, who never has to manage multiple interfaces.
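The question-chaining pattern can be sketched as an ordered list of questions whose answers are assembled in order once all are filled. A minimal sketch, assuming three hypothetical steps (role, purpose, constraints) and scripted replies in place of the chat; the "Role: Administrator | Purpose: Inventory management" values echo the simulated audit log, and the class name `SystemPromptAgent` is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical question sequence; each question is one short, consecutive step.
QUESTIONS: List[Tuple[str, str]] = [
    ("role", "What role should the assistant play?"),
    ("purpose", "What is the main goal of the app?"),
    ("constraints", "What should the assistant never do?"),
]


class SystemPromptAgent:
    def __init__(self, questions: List[Tuple[str, str]] = QUESTIONS) -> None:
        self.questions = questions
        self.answers: Dict[str, str] = {}

    def next_question(self) -> Optional[Tuple[str, str]]:
        """Return the first unanswered question, or None when all are answered."""
        for key, text in self.questions:
            if key not in self.answers:
                return key, text
        return None

    def answer(self, key: str, value: str) -> None:
        self.answers[key] = value.strip()

    def build_prompt(self) -> str:
        """Chain every answer, in question order, into the final system prompt."""
        missing = [key for key, _ in self.questions if key not in self.answers]
        if missing:
            raise ValueError(f"unanswered steps: {missing}")
        return "\n".join(f"{key.capitalize()}: {self.answers[key]}" for key, _ in self.questions)


# Scripted user replies stand in for the chat.
replies = {"role": "Administrator", "purpose": "Inventory management",
           "constraints": "Never delete records without confirmation"}

agent = SystemPromptAgent()
while (step := agent.next_question()) is not None:
    key, _question = step
    agent.answer(key, replies[key])
print(agent.build_prompt())
```

Because `next_question` always looks for the first unanswered step, the same loop works whether the user answers in one sitting or across several turns.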

The canvas visualizes the co-creation process as a flow of nodes, and each chat message is reflected on it instantly. For example, if the user types "Hello, I need a system prompt to create an app," the orchestrator agent captures the intent and summons the specialized system prompt agent, which breaks the task down into clear, consecutive steps.

The flow also respects the Human-in-the-Loop principle: at any moment, the user can pause the conversation, click a node to adjust a parameter directly in the Inspector, and, once satisfied, resume the chat; the agent detects the change and continues from the new point without losing context.
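The pause, edit, and resume cycle can be modeled by letting the agent always ask about the first empty node. A minimal sketch, assuming node ids taken from the PRD example in the action history (objective, features, KPIs); the `Flow` class is hypothetical, and requiring a pause before an Inspector edit is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Flow:
    order: List[str]  # node ids in the order the agent fills them
    values: Dict[str, str] = field(default_factory=dict)
    paused: bool = False

    def pause(self) -> None:
        self.paused = True

    def edit_node(self, node_id: str, value: str) -> None:
        """Inspector edit; in this sketch it is only allowed while paused."""
        if not self.paused:
            raise RuntimeError("pause the flow before editing a node")
        if node_id not in self.order:
            raise KeyError(node_id)
        self.values[node_id] = value

    def resume(self) -> Optional[str]:
        """Unpause and return the next node the agent should ask about.
        Nodes that already hold a value, including manual edits, are skipped,
        so nothing entered before the pause is lost."""
        self.paused = False
        for node_id in self.order:
            if node_id not in self.values:
                return node_id
        return None


flow = Flow(order=["objective", "features", "kpis"])
flow.values["objective"] = "Multi-category sales with AI for upselling"
flow.pause()
flow.edit_node("features", "Catalog, cart, payment gateway, ML upselling")
print(flow.resume())  # kpis
```

Deriving the next step from the node state, rather than from a separate conversation cursor, is what lets the agent "detect the change" after an edit.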

Following Tesler's Law, the inherent complexity of the flow's structure and of the system prompt's syntax does not disappear; the system absorbs it under the hood. The user only deals with the content (the "what"), while the system manages the "how."

Governance is not an afterthought, but a pillar of the design. The prototype simulates four key areas to build trust and ensure responsible AI use.

The user sees in real time how their intent translates into a workflow. Each node offers a clear explanation on hover.

💬 User: "hello, I need a system prompt to create an app"

The user always has the final say, with clear controls to manage the flow, cost, and execution.

Simulation of a real-time audit log. Events are logged according to CIS controls, and Model Armor screens prompts and responses for threats.

```json
[
  {
    "timestamp": "2025-07-21T14:32:10.123Z",
    "event_id": "EVT-20250721-001",
    "user_id": "usr_7f8a9b",
    "user_email": "ana@acme.io",
    "user_role": "product_manager",
    "agent_orchestrator": "orchestrator_main",
    "agent_specialized": "prompt_system_agent",
    "action": "prompt_sent",
    "payload_preview": "What is the main goal of the app?",
    "model_armor_result": "clean",
    "cis_control": "2.1 – Log Integrity",
    "ciphertext_key_id": "cme_key_42e1f",
    "audit_level": "detailed",
    "status": "success",
    "latency_ms": 320,
    "bytes_in": 128,
    "bytes_out": 256,
    "notes": "Prompt sanitized and encrypted before sending to LLM."
  },
  {
    "timestamp": "2025-07-21T14:32:45.987Z",
    "event_id": "EVT-20250721-002",
    "user_id": "usr_7f8a9b",
    "agent_specialized": "prompt_system_agent",
    "action": "response_received",
    "payload_preview": "Role: Administrator | Purpose: Inventory management...",
    "model_armor_result": "clean",
    "cis_control": "2.1 – Log Integrity",
    "ciphertext_key_id": "cme_key_42e1f",
    "audit_level": "detailed",
    "status": "success",
    "latency_ms": 410,
    "bytes_in": 512,
    "bytes_out": 768,
    "notes": "Response encrypted and logged without anomalies."
  },
  {
    "timestamp": "2025-07-21T14:33:02.004Z",
    "event_id": "EVT-20250721-003",
    "user_id": "usr_4c5e2d",
    "user_email": "dev@acme.io",
    "agent_specialized": "arch_agent",
    "action": "prompt_sent",
    "payload_preview": "Generate an architecture diagram with URL http://malicious.example.com",
    "model_armor_result": "block",
    "cis_control": "2.1 – Log Integrity",
    "ciphertext_key_id": null,
    "audit_level": "detailed",
    "status": "blocked",
    "latency_ms": 120,
    "bytes_in": 256,
    "bytes_out": 0,
    "notes": "Malicious URL detected; execution stopped. CMEK not applied."
  },
  {
    "timestamp": "2025-07-21T14:33:15.555Z",
    "event_id": "EVT-20250721-004",
    "user_id": "usr_7f8a9b",
    "action": "emergency_stop_triggered",
    "agent_orchestrator": "orchestrator_main",
    "payload_preview": null,
    "model_armor_result": null,
    "cis_control": "2.1 – Log Integrity",
    "ciphertext_key_id": null,
    "audit_level": "critical",
    "status": "emergency_halt",
    "latency_ms": 15,
    "bytes_in": 0,
    "bytes_out": 0,
    "notes": "User triggered Emergency Stop; all agents paused."
  }
]
```

The action history offers complete traceability, showing every step in the construction of the final artifact.

| Step | Time | Actor | Action | Detail | Status |
|---|---|---|---|---|---|
| 1 | 14:32:10 | Ana (PM) | Logs in | Successful login | ✅ |
| 2 | 14:32:15 | Orchestrator | Receives intent | «I need a PRD for an e-commerce app» | ✅ |
| 3 | 14:32:18 | System-Prompt-Agent | Requests data | «What is the main objective of the app?» | ✅ |
| 4 | 14:32:25 | Ana (PM) | Responds | «Multi-category sales with AI for upselling» | ✅ |
| 5 | 14:32:30 | System-Prompt-Agent | Generates node | Node-01 «Objective» created | ✅ |
| 6 | 14:32:35 | System-Prompt-Agent | Requests scope | «What key features do you need?» | ✅ |
| 7 | 14:32:45 | Ana (PM) | Responds | «Catalog, cart, payment gateway, AI recommendations» | ✅ |
| 8 | 14:32:50 | System-Prompt-Agent | Generates nodes | Nodes-02,03,04,05 created and linked | ✅ |
| 9 | 14:33:05 | Dev (viewer) | Edits node | Adjusts «AI recommendations» → «ML upselling» | ✅ |
| 10 | 14:33:10 | System-Prompt-Agent | Requests metrics | «Main KPIs?» | ✅ |
| 11 | 14:33:18 | Ana (PM) | Responds | «CR > 5%, repurchase rate > 15%» | ✅ |
| 12 | 14:33:20 | System-Prompt-Agent | Generates node | Node-06 «KPIs» created | ✅ |
| 13 | 14:33:25 | Orchestrator | Closure | PRD deliverable marked as complete | ✅ |
| 14 | 14:33:30 | Audit | Exports log | JSON history generated | ✅ |
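Because the audit log is structured JSON, it lends itself to automated review. A minimal sketch, assuming the log is exported as a JSON array like the simulated one above (trimmed here to the fields used): it counts events by status, lists the events Model Armor blocked, and flags whether an Emergency Stop occurred. The function name `summarize` is hypothetical.

```python
import json
from collections import Counter
from typing import Any, Dict

# Trimmed copy of the simulated audit log, keeping only the fields used here.
AUDIT_LOG = """[
  {"event_id": "EVT-20250721-001", "action": "prompt_sent", "model_armor_result": "clean", "status": "success"},
  {"event_id": "EVT-20250721-002", "action": "response_received", "model_armor_result": "clean", "status": "success"},
  {"event_id": "EVT-20250721-003", "action": "prompt_sent", "model_armor_result": "block", "status": "blocked"},
  {"event_id": "EVT-20250721-004", "action": "emergency_stop_triggered", "model_armor_result": null, "status": "emergency_halt"}
]"""


def summarize(raw: str) -> Dict[str, Any]:
    """Aggregate an exported audit log into a small review summary."""
    events = json.loads(raw)
    return {
        "by_status": dict(Counter(e["status"] for e in events)),
        "blocked_ids": [e["event_id"] for e in events if e.get("model_armor_result") == "block"],
        "halted": any(e.get("action") == "emergency_stop_triggered" for e in events),
    }


print(json.dumps(summarize(AUDIT_LOG), indent=2))
```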

All Hub Context is designed with security, accessibility, and usability standards to ensure a robust, inclusive, and reliable experience. These elements reflect our commitment to ethical governance and user experience quality.


A red "Emergency Stop" button remains visible in any view; pressing it immediately halts all agents.
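One way to make a single button halt every agent is a shared stop flag that the scheduler checks before each agent step. A minimal sketch, assuming agents run as cooperative steps under one round-robin scheduler; the generator-based `agent`, the `run_agents` scheduler, and the `on_tick` hook are hypothetical. `threading.Event` is used so the same flag would also work if agents ran on separate threads.

```python
import threading
from typing import Callable, Iterator, List


def agent(name: str, steps: int) -> Iterator[str]:
    """A specialist agent modelled as a generator: each next() call is one step."""
    for i in range(steps):
        yield f"{name}: step {i}"


def run_agents(agents: List[Iterator[str]], stop: threading.Event,
               on_tick: Callable[[int], None] = lambda tick: None) -> List[str]:
    """Round-robin scheduler that checks the shared stop flag before every step."""
    log: List[str] = []
    active = list(agents)
    tick = 0
    while active:
        for gen in list(active):
            on_tick(tick)  # hook where UI events, such as the red button, arrive
            tick += 1
            if stop.is_set():
                log.append("EMERGENCY STOP: all agents paused")
                return log
            try:
                log.append(next(gen))
            except StopIteration:
                active.remove(gen)
    return log


stop = threading.Event()
# Simulate the user pressing Emergency Stop at the fourth scheduler tick.
log = run_agents([agent("prd_agent", 3), agent("arch_agent", 3)], stop,
                 on_tick=lambda tick: stop.set() if tick == 3 else None)
print(log)
```

The suspended generators keep their position, so a paused flow could later be resumed rather than restarted.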

From the start, the interface was conceived to be both visually appealing and functional. The dark theme, generous spacing, and minimalist icons activate the Aesthetic-Usability Effect.

The highlighted nodes and the left-center-right layout apply Common Region, Proximity, and the Von Restorff Effect to guide attention effortlessly.

Through chunking and collapsible sections, information is presented in small, meaningful blocks that respect the limits of working memory and reduce cognitive load.

When designing the user experience for this multi-agent system, I opted for familiar UI patterns such as drag-and-drop nodes on a canvas, a side inspector, and a chat assistant. These patterns match user expectations, avoid introducing new rules, and shorten the learning curve.

When devising the experience, my intention was for feature discovery to be as fluid as having a conversation.

In the chat, when the orchestrator agent invokes the specialist agent for the deliverable the user needs, it offers, without interrupting the conversation, the option to start a step-by-step guided tour of the canvas.

The user simply has to reply "yes" for the first step to activate instantly, with no menus or extra clicks.

An "Auto/Manual/Secure" mode selector allows control over credit spending. In manual mode, a pop-up simulates the estimated cost (e.g., "This action will use 5 credits, confirm?"). Secure mode forces CMEK, full auditing, and maximum Model Armor filters.
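The three modes can be expressed as execution policies looked up before each action. A minimal sketch: only the Manual cost pop-up and Secure's CMEK, full auditing, and maximum Model Armor filters come from the text; every other value, and the names `ExecutionPolicy`, `POLICIES`, and `run_action`, are assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional


class Mode(Enum):
    AUTO = "auto"
    MANUAL = "manual"
    SECURE = "secure"


@dataclass(frozen=True)
class ExecutionPolicy:
    confirm_cost: bool       # show the cost pop-up before running
    force_cmek: bool         # require customer-managed encryption keys
    audit_level: str
    armor_filter_level: str


# Only Manual's pop-up and Secure's CMEK/full audit/maximum filters come from
# the text; every other value here is an assumption.
POLICIES = {
    Mode.AUTO: ExecutionPolicy(False, False, "standard", "default"),
    Mode.MANUAL: ExecutionPolicy(True, False, "standard", "default"),
    Mode.SECURE: ExecutionPolicy(False, True, "full", "maximum"),
}


def run_action(mode: Mode, credits: int,
               confirm: Callable[[str], bool]) -> Optional[ExecutionPolicy]:
    """Return the policy the action runs under, or None if the user cancels."""
    policy = POLICIES[mode]
    if policy.confirm_cost and not confirm(f"This action will use {credits} credits, confirm?"):
        return None
    return policy


asked: List[str] = []


def user_declines(message: str) -> bool:
    asked.append(message)  # record what the pop-up would show
    return False


print(run_action(Mode.MANUAL, 5, user_declines))  # None
print(asked)  # ['This action will use 5 credits, confirm?']
print(run_action(Mode.SECURE, 5, user_declines).force_cmek)  # True
```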

The interface displays real-time security alerts: if a node contains a malicious URL, the message "Security Error – Execution stopped. A malicious URL was detected in the node's prompt. Please review it. Go to node" is issued. The flow is paused until the user edits the content and confirms the correction.
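The pause-until-corrected behavior can be sketched as a node that refuses to run while flagged. Model Armor's own detection is not reproduced here: a plain host blocklist (the hypothetical `BLOCKLIST`) stands in for it, and `GuardedNode` and `find_malicious_url` are illustrative names. The error text reuses the message quoted above.

```python
import re
from dataclasses import dataclass
from typing import Optional, Set
from urllib.parse import urlparse

URL_RE = re.compile(r'https?://[^\s"<>]+')
BLOCKLIST: Set[str] = {"malicious.example.com"}  # hypothetical stand-in for Model Armor
SECURITY_ERROR = ("Security Error – Execution stopped. A malicious URL was detected "
                  "in the node's prompt. Please review it.")


def find_malicious_url(prompt: str, blocklist: Set[str] = BLOCKLIST) -> Optional[str]:
    """Return the first URL whose host is on the blocklist, if any."""
    for url in URL_RE.findall(prompt):
        host = (urlparse(url).hostname or "").rstrip(".")
        if host in blocklist:
            return url
    return None


@dataclass
class GuardedNode:
    prompt: str
    paused: bool = False

    def run(self) -> str:
        if self.paused:
            return "waiting for the user to review the node"
        if find_malicious_url(self.prompt):
            self.paused = True  # stays paused until the user edits and confirms
            return SECURITY_ERROR
        return "executed"

    def edit_and_confirm(self, new_prompt: str) -> None:
        self.prompt = new_prompt
        self.paused = False  # run() re-checks the edited prompt


node = GuardedNode("Generate an architecture diagram with URL http://malicious.example.com")
first = node.run()    # blocked
second = node.run()   # still paused
node.edit_and_confirm("Generate an architecture diagram for the inventory app")
third = node.run()    # executed
print(first, second, third, sep="\n")
```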

The interface complies with WCAG 2.1 standards (high contrast, keyboard navigation), ensuring it is accessible to all users.

A simulated language selector (ES / EN / FR) in the top right corner allows changing the language of the interface and agents without reloading the page.
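Switching language without a reload amounts to changing one piece of state that both the UI labels and the agents' questions read from. A minimal sketch, assuming a single shared message catalog; the `MESSAGES` keys, their translations, and the English fallback are hypothetical.

```python
from typing import Dict

# Hypothetical message catalog shared by the UI and the agents.
MESSAGES: Dict[str, Dict[str, str]] = {
    "en": {"emergency_stop": "Emergency Stop",
           "ask_goal": "What is the main goal of the app?",
           "go_to_node": "Go to node"},
    "es": {"emergency_stop": "Parada de emergencia",
           "ask_goal": "¿Cuál es el objetivo principal de la app?"},
    "fr": {"emergency_stop": "Arrêt d'urgence",
           "ask_goal": "Quel est l'objectif principal de l'application ?"},
}


class Localizer:
    def __init__(self, lang: str = "en", fallback: str = "en") -> None:
        self.fallback = fallback
        self.set_language(lang)

    def set_language(self, lang: str) -> None:
        """Switch language in place; every later lookup uses it, no reload needed."""
        if lang not in MESSAGES:
            raise ValueError(f"unsupported language: {lang}")
        self.lang = lang

    def t(self, key: str) -> str:
        """Look up a string, falling back to the default language if untranslated."""
        return MESSAGES[self.lang].get(key) or MESSAGES[self.fallback][key]


ui = Localizer("es")
print(ui.t("emergency_stop"))  # Parada de emergencia
ui.set_language("fr")
print(ui.t("ask_goal"))        # Quel est l'objectif principal de l'application ?
print(ui.t("go_to_node"))      # Go to node (falls back to English)
```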

The high-fidelity prototype was designed to simulate key interactions and validate the user experience. Simulated usability tests were conducted with 5 users (UX designers and developers), who completed tasks such as creating a PRD and adjusting nodes on the canvas.

(Rapid Iterative Testing and Evaluation (RITE), conducted over 10 sessions with 1 marketing director, 3 developers, 4 content creators, and 2 prompt engineers)

Checklist #2.1

"The app is intuitive; in just a few minutes, I was creating a professional prompt."

Checklist #3.2

"The info messages on the nodes helped me understand the system better."

Checklist #4.3

"This button is very important for not losing control."

Checklist #5.1

"The 'PII will be inspected' banner makes me feel secure, especially with confidential information."

Checklist #6.4

"Overall, the application is quite smooth."

Checklist #9.5

"It's essential for reaching users all over the world."

Adjust node animations (fade-in/out) to reduce perceived latency.

Connect Hotjar to the prototype.

Establish success thresholds: a learning curve under 3 minutes, more than 85% of tasks completed, and Emergency Stop usage below 5%.

Expand testing to 30 users.

Simulate multiple simultaneous agents.

Translate all UI and agent content to EN, FR, DE.

Have a third party review the CMEK and Model Armor setup (SOC 2).

Launch a closed MVP in 6 months, with a soft launch to 100 users.
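The three success thresholds in the next steps can be checked automatically after each test round. A minimal sketch under stated assumptions: the learning curve is read as the average minutes until a participant works unaided, completion as completed over attempted tasks, and Emergency Stop usage as the share of sessions that pressed it. The session data is invented, and `Session` and `evaluate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Session:
    learning_minutes: float  # time until the participant works unaided
    tasks_completed: int
    tasks_total: int
    used_emergency_stop: bool


def evaluate(sessions: List[Session]) -> Dict[str, bool]:
    """Check a test round against the three success thresholds."""
    if not sessions:
        raise ValueError("no sessions to evaluate")
    n = len(sessions)
    avg_learning = sum(s.learning_minutes for s in sessions) / n
    completion = sum(s.tasks_completed for s in sessions) / sum(s.tasks_total for s in sessions)
    stop_rate = sum(s.used_emergency_stop for s in sessions) / n
    return {
        "learning_curve_under_3_min": avg_learning < 3,
        "completion_above_85_pct": completion > 0.85,
        "emergency_stop_below_5_pct": stop_rate < 0.05,
    }


# Invented sessions for illustration only.
sessions = [
    Session(2.5, 5, 5, False),
    Session(2.0, 4, 5, False),
    Session(3.5, 5, 5, True),
    Session(2.8, 5, 5, False),
]
print(evaluate(sessions))
```

With only four sessions, a single Emergency Stop press already breaks the 5% threshold, which is one reason the larger 30-user round matters.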

I have integrated Google Analytics 4 to turn every prototype interaction into useful data: every mode change (Auto, Manual, or Secure), every click on a node, and every activation of the panic button generates a custom event that feeds key metrics like task time, completion rate, and usage frequency by user profile.

GA4 reports are visualized on a simulated real-time dashboard, allowing researchers to detect friction patterns and prioritize iterations without needing to collect additional external data.
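The text does not describe how the events reach GA4; in a browser this would typically go through gtag.js. As an alternative sketch, the same custom events could be sent server-side with the GA4 Measurement Protocol, which accepts a JSON body containing a `client_id` and a list of `events`, POSTed to `https://www.google-analytics.com/mp/collect` with `measurement_id` and `api_secret` query parameters. The event names (`mode_change`, `node_click`, `emergency_stop`), the parameter names, and the client id are hypothetical, and the code only builds and validates the body without sending anything.

```python
import json
import re
from typing import Any, Dict

# GA4 event names must start with a letter and use only letters, digits and
# underscores, up to 40 characters.
EVENT_NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,39}")


def build_mp_payload(client_id: str, name: str, params: Dict[str, Any]) -> str:
    """Build (but do not send) a Measurement Protocol request body for one event."""
    if not EVENT_NAME_RE.fullmatch(name):
        raise ValueError(f"invalid GA4 event name: {name!r}")
    return json.dumps({"client_id": client_id, "events": [{"name": name, "params": params}]})


# Hypothetical custom event mirroring a mode change in the prototype.
payload = build_mp_payload("555.1234567890", "mode_change",
                           {"mode": "secure", "user_profile": "developer"})
print(payload)
```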

All Hub Context redefines how professionals collaborate with AI to create deliverables, shifting from unstructured dialogues to a guided, visual, and ethical process.

This high-fidelity prototype demonstrates an intuitive user experience that addresses key pain points.

Call to action: We invite you to explore the prototype, provide feedback, and collaborate on the evolution of All Hub Context as a leading tool in AI-powered co-creation.


Let's talk.

Are you looking for a UX designer for artificial intelligence who can help broaden perspectives and reduce biases in conversational AI?

Write to me directly at info@josegalan.dev and let's see how we can work together.