Case Study: All Hub Content - Multi-Agent Visual Co-Creation System

This case study describes the entire UX design process for All Hub Content Lab, a high-fidelity prototype (AI Spike) that simulates a visual co-creation environment for multimodal content.

The goal is to orchestrate a multi-agent system that transforms creators' knowledge into reusable assets while ensuring brand consistency, building user trust, and drastically reducing production time.

- Real results: Generation of text and images via Google Gemini API.

- Flows and simulations: Agent orchestration, governance, usage metrics, and audit logs simulated and validated with users.

- Strategic objective: Validate the user experience and design hypothesis, not build an MVP.

01

The Problem: The Quantified Context Crisis

Content creators suffer from choice overload and enormous cognitive load. The current process is a chaos of "task switching" between disconnected tools, a friction that not only consumes time but also destroys the coherence of the original idea.

The fundamental problem is not the lack of powerful tools, but the absence of an ecosystem that intelligently manages context.

- Time lost per day (survey, n=25): 30% of the productive day.

- Tools in the average stack (interviews, 12 creators): chronic cognitive fragmentation.

- Creators who lose consistency (survey): frustration and rework.

- Abandonment of complex flows (behavioral analysis): lost ideas and opportunities.

02

Deep User Research

In-depth Research on the Frustrations of Content Creators in Fragmented Digital Ecosystems

2.1. Research Methodology and Tools

- Digital ethnography: Qualitative analysis of threads in online communities, using Perplexity and Gemini Deep Research to synthesize discussions and trends.

- Qualitative interviews (10 sessions): Semi-structured interviews with a heterogeneous group of users, ranging from highly technical experts to completely non-technical users, to capture the challenges and needs from multiple perspectives.

2.2. The 5 Main Pain Points

- Massive productivity loss due to "context switching"

- Cognitive overload and risk of burnout

- Tool fragmentation and information silos

- Loss of creative and brand consistency

- Analysis paralysis ("choice overload")

2.3. User Persona: "The Context Juggler"

2.4. The Right Design Question (Applying Occam's Razor)

❌ Incorrect Question (Technical Focus)

"How can we build a system to connect prompt nodes in sequence?"

✅ Correct Question (Design Mission)

"How do we design an ecosystem where a creator's context (their brief, their style) is not something repeatedly entered, but a persistent asset that guides a team of agents to produce multimodal content that is always on-brand and trustworthy?"

03

The Solution: A High-Fidelity Prototype

3.1. Prototype Scope (Real vs. Simulated)

| Component | Validation Goal |
|---|---|
| Node Interface | Validate the usability of the visual canvas. |
| Content Generation | Validate the impact of speed (Doherty Threshold). |
| Agent Orchestration | Validate the clarity of the flow without building the full backend. |
| Governance and Auditing | Test the user's perception of trust and control. |

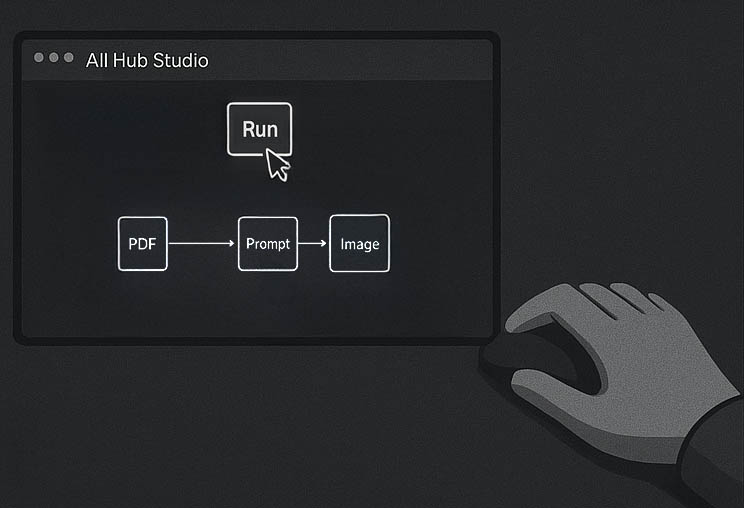

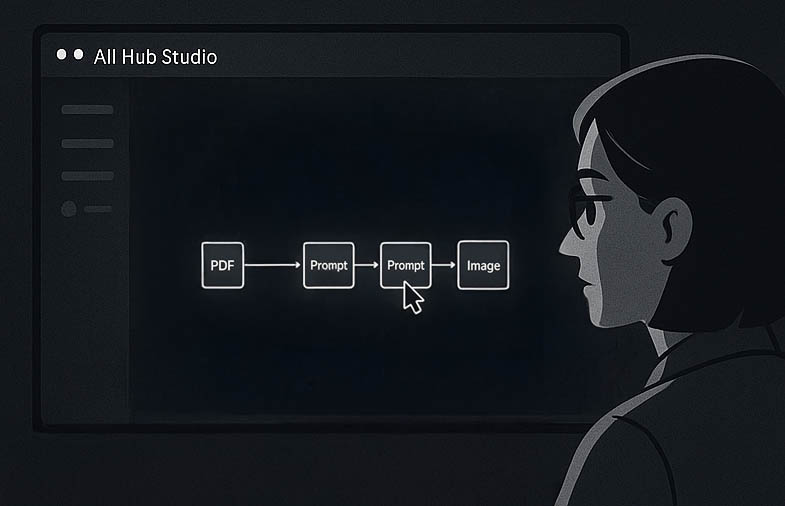

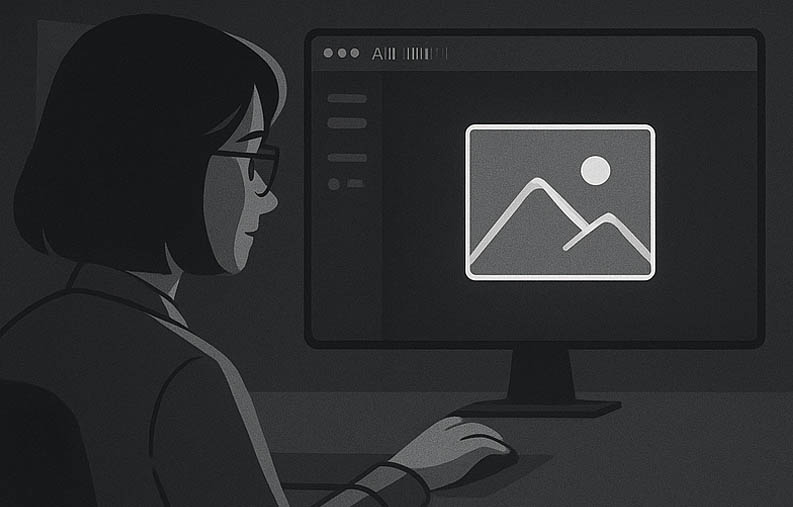

3.2. Storyboard of the Ideal Experience

Creative Chaos

Sara, overwhelmed, navigates between apps for a single idea. It's a clear case of 'Choice Overload' that stifles her creativity.

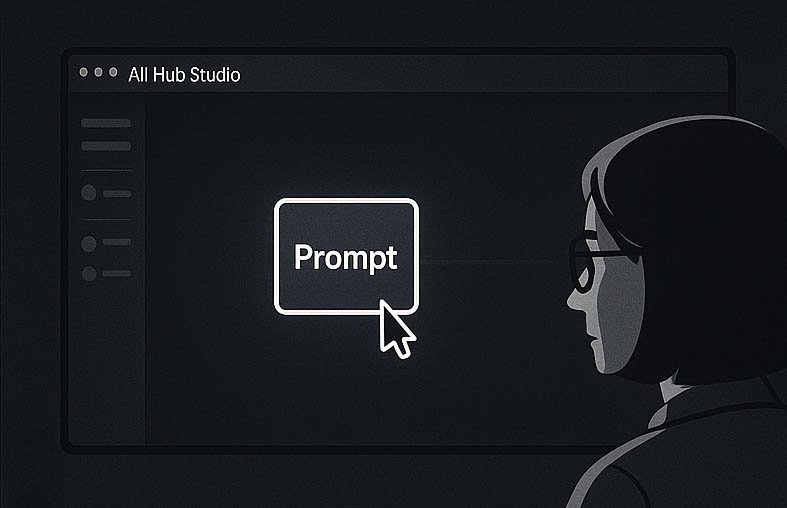

The Focus

In All Hub Content, her idea is in one place. We apply the Law of Simplicity with a clean interface that eliminates distractions.

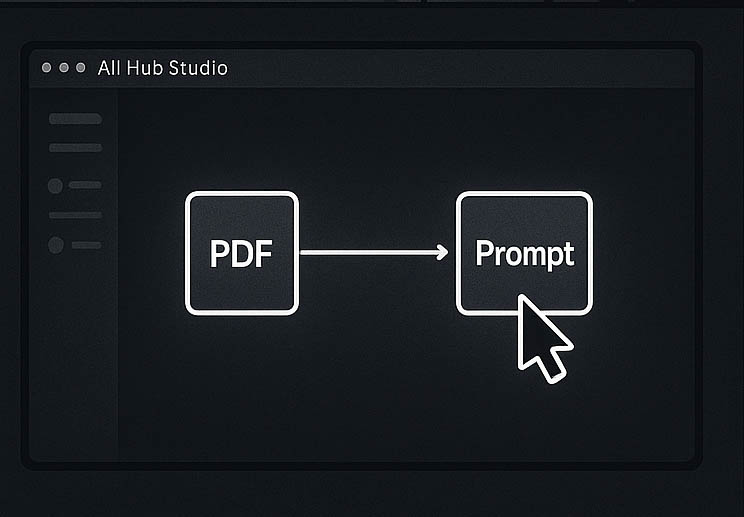

Context is King

She drags the brief to the PDF node, and the system integrates it as a single unit, applying the Law of Common Region.

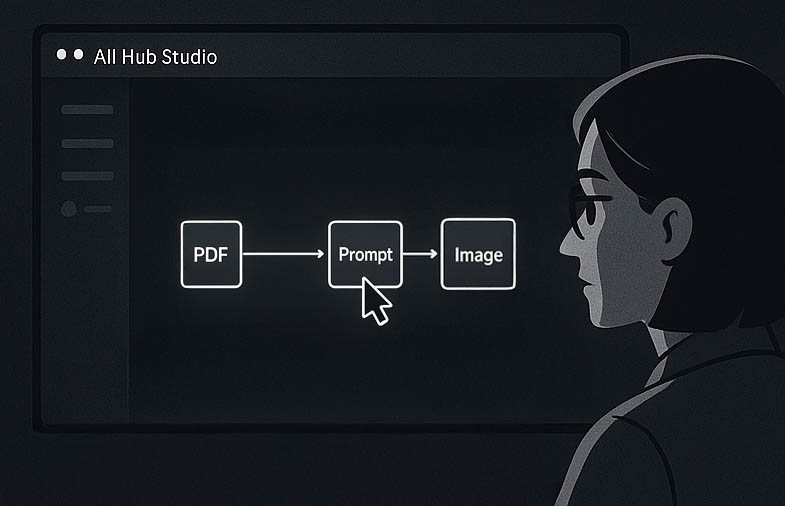

Visual Orchestration

Visually connecting the prompt node to the image node signals that one generates the other, an application of the Law of Uniform Connectedness.

The Magic Begins

She hits 'Generate.' The system responds in under 0.4s, meeting the Doherty Threshold to ensure a fluid and frictionless interaction.

The Vision Materialized

In seconds, the text and an image appear. This is the positive 'peak' of the experience according to the Peak-End Rule.

Creative Control

She adds a prompt to adjust the image. Following the Law of Conservation of Complexity, the system absorbs the difficulty and leaves her with a single, simple connection to make.

Creation in a Flow State

With one click, she gets the perfect image. By removing friction, the design has allowed her to achieve a creative Flow State.

04

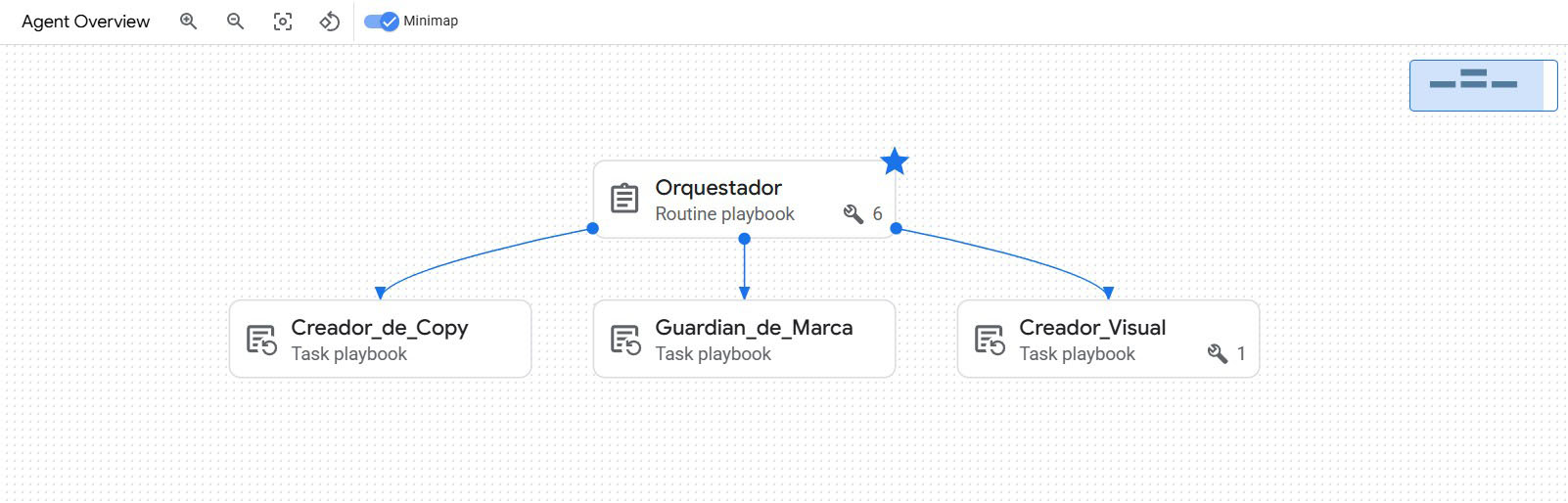

System Design: Architecture and Governance

4.3. The Technical Blueprint: Digital Twin and UX Laws

Component Architecture Diagram

4.4. From Intelligence to Mastery: Validating Specialization with Fine-Tuning

Specialization Pilot: The Birth of the "Brand Guru"

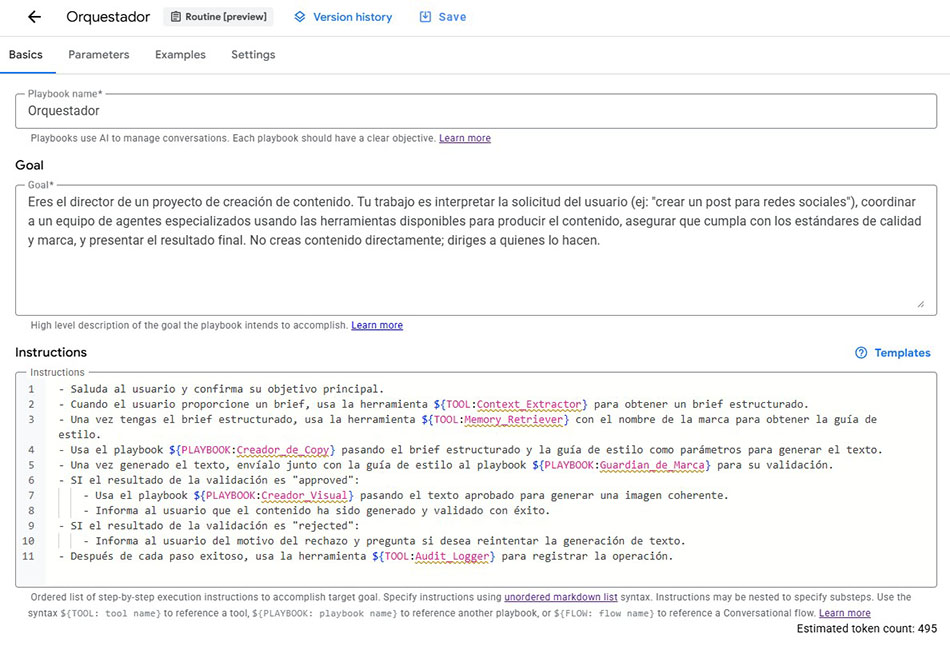

I selected the Brand Guardian Agent as the perfect candidate for this pilot. Its task of content validation is subjective, full of nuances, and fundamental to user trust.

My implementation process:

- Base Model: I chose gemini-2.0-flash-lite-001, an efficient and fast model, ideal for validating the process.

- Dataset Curation: I manually created a high-quality dataset in .jsonl format, composed of 100 training examples and a separate set of 8 examples for validation.

- Tuning in Vertex AI Studio: I launched a "supervised tuning" job, providing the datasets to train and evaluate the model.
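The dataset step above can be sketched in a few lines of Python. This is a minimal sketch, not the actual dataset: the sample pairs, the prompt wording, and the verdict labels are hypothetical placeholders, and the record shape (`contents` with `role` and `parts`) is the chat-style layout I understand Vertex AI supervised tuning to expect for Gemini models, which should be checked against the current documentation before use. Only the 100/8 split comes from the pilot itself.

```python
import json
import random


def make_example(content: str, verdict: str) -> dict:
    """Wrap one (content, verdict) pair in a chat-style tuning record."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [{"text": f"Review this copy against the brand guide:\n{content}"}],
            },
            {"role": "model", "parts": [{"text": verdict}]},
        ]
    }


def split_dataset(examples, n_validation, seed=0):
    """Shuffle deterministically, then hold out n_validation examples."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return shuffled[n_validation:], shuffled[:n_validation]


def to_jsonl(examples) -> str:
    """Serialize one JSON object per line, as the .jsonl format requires."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples) + "\n"


# Hypothetical placeholder pairs standing in for the hand-curated examples.
pairs = [
    (f"Sample post {i}", "APPROVED" if i % 2 == 0 else "REJECTED: off-brand tone")
    for i in range(108)
]
examples = [make_example(content, verdict) for content, verdict in pairs]

# 100 training examples and a separate set of 8 for validation.
train, validation = split_dataset(examples, n_validation=8)
train_jsonl = to_jsonl(train)
validation_jsonl = to_jsonl(validation)
```

Keeping the split deterministic (a fixed seed) makes it possible to rerun the tuning job on exactly the same train and validation files.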

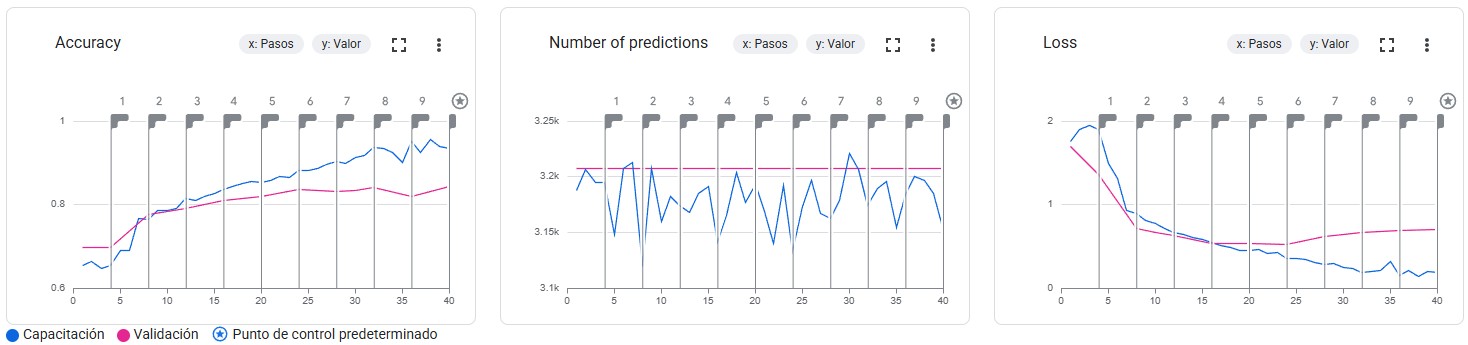

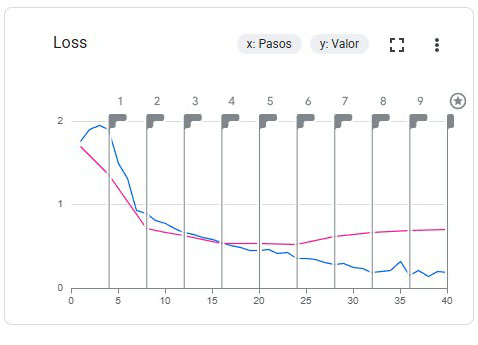

The Results: Quantitative Evidence of Mastery

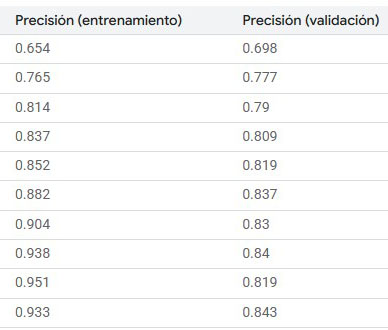

The training was completed successfully, and the resulting metrics not only validated my hypothesis but exceeded expectations. Below, I present and analyze the data.

Analysis of Learning Metrics:

- Accuracy: The accuracy graph is the clearest proof of success.

- The blue line (Training) shows that the model quickly learned the study material, approaching 100% correctness.

- More importantly, the pink line (Validation) shows that the model did not merely memorize the training examples but learned to generalize, reaching and holding an accuracy above 80% on examples it had never seen. This suggests it has internalized the brand rules rather than just repeating them.

- Loss: The loss graph, which measures the level of error, reinforces this conclusion. Both curves (training and validation) drop sharply and then stabilize, the classic shape of a healthy learning curve, indicating that the model converged without obvious signs of overfitting.

Checkpoint Analysis:

This table allows us to see the model's progress at each training "epoch." The final result is compelling: at the final default checkpoint (step 40), the model achieved a validation accuracy of 84.3% (0.843).

Achieving this level of reliability with such a compact initial dataset validates the effectiveness and efficiency of the fine-tuning approach.

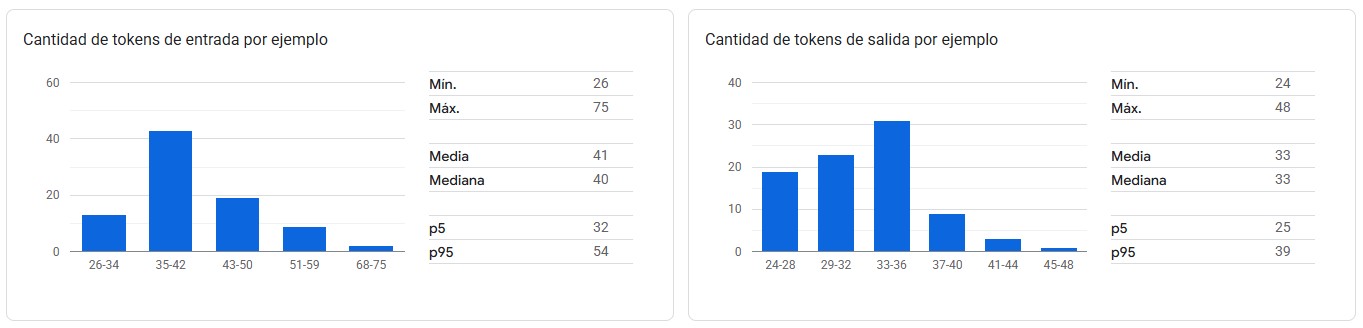

Dataset Analysis:

Finally, the analysis of the input and output token distribution confirms that the dataset I designed was balanced. There were no excessively long or short examples that could bias the training, which contributed to a stable and efficient learning process.

Pilot Conclusion: A Validated Strategy Ready to Scale

This successful pilot is more than just a technical experiment; it is the practical validation of my architectural vision. It demonstrates that my design of a modular agent ecosystem not only works but is poised to evolve.

The Vision for the Future: A Team of Masters

- The Copywriter Agent can be tuned with the company's thousands of top-performing posts, emails, and articles to learn to replicate success.

- The Context Analyst can be tuned with hundreds of internal briefs and documents to learn to identify the organization's specific nuances and priorities.

- The Visual Creator can learn to generate prompts for the image model that align with the brand's historically most successful visual aesthetics.

4.5. Governance and Security Principles Reflected in the Design

Transparency: The user can see the agent flow.

The Assistant's panel displays the agent flow, showing each step of the process.

Control: The user can intervene at any time.

The user can intervene, pause, or edit at any point in the flow.

Brand Safety: Consistency guaranteed.

The Guardian Agent ensures all generated content is consistent.

Traceability: Immutable history for auditing.

The Auditor Agent creates an immutable history for every decision.

Continuous Improvement: Feedback-based learning (RLHF).

The system is designed to learn from user feedback (RLHF).
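The transparency and control principles above can be illustrated with a small orchestration loop. This is a sketch under stated assumptions, not the prototype's implementation (orchestration is simulated in the prototype): the agent order, the stub agents, the `run_flow` function, and the `on_step` callback are all hypothetical. The idea it shows is that each step is recorded in a visible trace, and a stop signal is checked before every agent runs.

```python
import threading
from typing import Callable, Optional

Agent = Callable[[dict], dict]


def run_flow(
    agents: list[tuple[str, Agent]],
    context: dict,
    stop: threading.Event,
    on_step: Optional[Callable[[str, dict], None]] = None,
):
    """Run agents in order, honoring a stop request before each step."""
    trace = []  # what an assistant panel could display step by step
    for name, agent in agents:
        if stop.is_set():
            trace.append((name, "stopped"))
            break
        context = agent(context)
        trace.append((name, "done"))
        if on_step:
            on_step(name, context)
    return context, trace


def stub(name: str) -> Agent:
    """Hypothetical agent that only records that it ran."""
    def agent(context: dict) -> dict:
        return {**context, "steps": context["steps"] + [name]}
    return agent


stop = threading.Event()
flow = [
    (name, stub(name))
    for name in ("context_analyst", "copywriter", "visual_creator", "brand_guardian")
]


def on_step(name: str, context: dict) -> None:
    # Simulate the user pressing stop right after the copywriter finishes.
    if name == "copywriter":
        stop.set()


result, trace = run_flow(flow, {"brief": "Launch post", "steps": []}, stop, on_step)
```

Checking the stop signal between steps, rather than inside an agent, keeps the rule simple: no new agent starts once the user has asked to stop.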

CIS Google Cloud v2.0.0

Robust security to protect data on Google Cloud.

Model Armor

Filters prompts and responses to prevent security risks.

Zero-Training

Intuitive interface, with no prior training needed.

WCAG 2.1

Guaranteed accessibility for users with disabilities.

i18n-Ready

Multilingual support for a global experience.

4.5.1. Evolutionary Security Architecture

Prototype

Production Vision

4.5.2. "AI Act Ready" Design – Compliance with the European AI Act (2025)

| AI Act Obligation (Chapter I and III) | Implemented Design Decision |
|---|---|
| Inform that interaction is with AI | Persistent banner and contextual microcopy: “🤖 All Hub Assistant, Your creative co-pilot with Artificial Intelligence.” |
| Mark all generated content | Automatic "✨ AI Generated – All Hub" tag attached to every text and image. |
| Explain the automated process | Planned: "How it works" modal accessible from any node: a step-by-step visual tour of the orchestration. |
| Effective human control | "Emergency Stop" button active in each phase; flow stops if the human requests it. |
| Traceability and auditing | Auditor Agent automatically records in Firestore: original prompt, agents involved, decisions, and final outputs (immutable timestamp). |
| Preparation for regulatory scaling | Architecture prepared to integrate Model Armor in production (DLP, malicious URL detection, and centralized security policies). |
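The traceability row can be made concrete with a small in-memory sketch of an audit record. It does not touch Firestore and is not the prototype's code (auditing is simulated there): the `AuditRecord` and `AuditLog` names are hypothetical, and hash chaining is my own assumption about one way to make a history tamper-evident. The recorded fields (original prompt, agents involved, decisions, final outputs, timestamp) follow the table.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


@dataclass(frozen=True)
class AuditRecord:
    prompt: str
    agents: tuple
    decisions: tuple
    outputs: tuple
    timestamp: str
    prev_hash: str
    record_hash: str = ""


def digest(record: AuditRecord) -> str:
    """Hash every field except the stored hash itself."""
    payload = asdict(record)
    payload.pop("record_hash")
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class AuditLog:
    """Append-only log where each record commits to the previous one."""

    def __init__(self):
        self._records = []

    @property
    def records(self) -> tuple:
        return tuple(self._records)

    def append(self, prompt, agents, decisions, outputs) -> AuditRecord:
        prev_hash = self._records[-1].record_hash if self._records else GENESIS_HASH
        draft = AuditRecord(
            prompt=prompt,
            agents=tuple(agents),
            decisions=tuple(decisions),
            outputs=tuple(outputs),
            timestamp=datetime.now(timezone.utc).isoformat(),
            prev_hash=prev_hash,
        )
        record = replace(draft, record_hash=digest(draft))
        self._records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash and check that the chain is unbroken."""
        expected_prev = GENESIS_HASH
        for record in self._records:
            if record.prev_hash != expected_prev or digest(record) != record.record_hash:
                return False
            expected_prev = record.record_hash
        return True


log = AuditLog()
log.append("Write a launch post", ["copywriter"], ["draft accepted"], ["post text"])
log.append("Create a hero image", ["visual_creator", "brand_guardian"], ["approved"], ["image id"])
chain_ok = log.verify()
```

In a real deployment the same record would be written to a document store, with write-once access rules doing the job that the frozen dataclass only hints at here.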

Mark all generated content

Automatic ✨ AI Generated tag with tooltip "Image generated with Artificial Intelligence"

Effective human control

"Edit / Reject" button active in each phase; flow stops if the human requests it

AI Act Ready

This prototype is designed to comply with the European AI Act from August 2025: transparency, user control, and integrated traceability.

05

Prototyping and Validation (RITE)

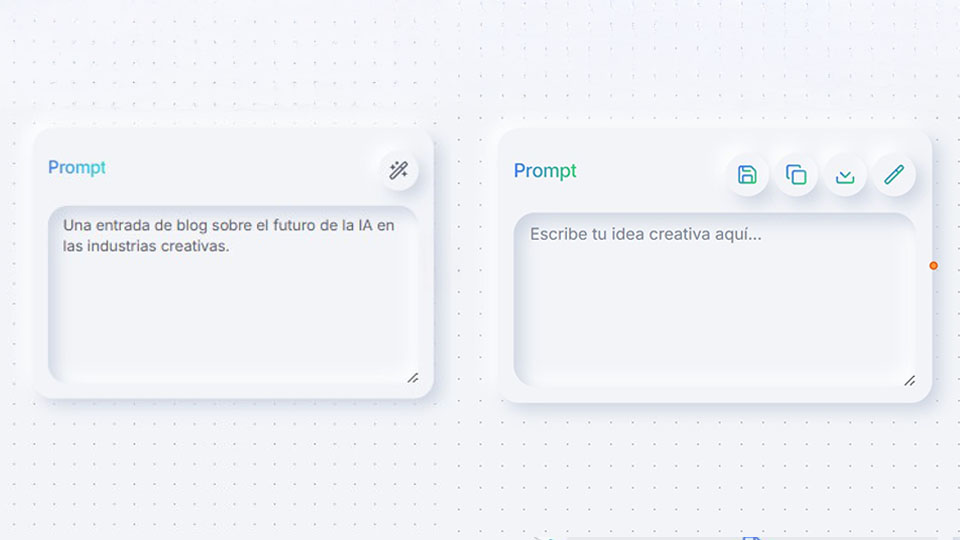

5.1. Evolution of the Prompt Node Design

5.2. Validation Results: The Measured Impact

Test Methodology

- Participants: The 15 creators were divided into two groups. Group A (7 users) used the V1 node, and Group B (8 users) used the V2.

- Assigned Task: "Create three variations of a prompt for a marketing campaign for a new product. Save the best version for future use."

- Key Metrics: Task Time, Success Rate, Usability Errors, and Perceived Satisfaction (1-5).

Quantitative Impact: The Difference Between Showing and Empowering

Chart: V1 vs. V2 Node Performance Comparison, covering Task Time (s), Success Rate (%), Errors, and Satisfaction (1-5).

This chart illustrates the superiority of the V2 Node. It shows a drastic reduction in task time and errors, along with a massive increase in success rate and user satisfaction, quantitatively validating the design decisions.

Qualitative Impact: "Now I actually understand how to use it"

Finding 1: Direct actions eliminate mental friction

💬 (V1): "I spent a while looking for how to copy the text. I ended up using Ctrl+C and Ctrl+V..."

💬 (V2): "Ah, perfect. I see the duplicate icon. I click it, and it's done. I didn't have to think."

Finding 2: Reusability transforms the perception of value

"When I saw the save icon, it all clicked. I realized I wasn't just writing a prompt for this one time, but investing in my future work."

Validation Conclusion:

06

Design for Evolution: Continuous Learning

6.1. Designed Feedback Mechanisms

Implicit Feedback (High-Confidence Signal)

If the user decides to use the generated content (e.g., clicks "Export"), it is recorded as a validated success. This type of feedback carries a higher weight, as it indicates real satisfaction with the result and reinforces the patterns that led to it.

Explicit Feedback (RLHF)

Each content generation is accompanied by a simple yet powerful feedback interface. Every user vote is a labeled training data point that feeds the system's contextual memory.
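The two feedback channels can be combined into a single score per generation. The sketch below is illustrative only: the `FeedbackEvent` shape, the event kinds, and the weight values are hypothetical. The one thing taken from the design is the ordering, with implicit feedback (an export) weighted higher than an explicit vote.

```python
from dataclasses import dataclass

# Hypothetical weights; the design only states that implicit feedback weighs more.
IMPLICIT_WEIGHT = 2.0
EXPLICIT_WEIGHT = 1.0


@dataclass
class FeedbackEvent:
    generation_id: str
    kind: str   # "export" for implicit feedback, "vote" for explicit feedback
    value: int  # +1 or -1; an export always counts as +1


def score(events) -> dict:
    """Sum weighted feedback per generated item."""
    totals = {}
    for event in events:
        weight = IMPLICIT_WEIGHT if event.kind == "export" else EXPLICIT_WEIGHT
        totals[event.generation_id] = totals.get(event.generation_id, 0.0) + weight * event.value
    return totals


events = [
    FeedbackEvent("g1", "vote", 1),
    FeedbackEvent("g1", "export", 1),
    FeedbackEvent("g2", "vote", -1),
]
scores = score(events)
```

Here `g1` ends at 3.0 (one upvote plus one export) and `g2` at -1.0, so an exported result outranks one that was only voted on.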

6.2. Diagram of the Learning Loop and Ecosystem Evolution

This loop ensures that the system becomes smarter and more personalized with each use, creating a powerful network effect and a sustainable competitive advantage.

07

Conclusion and Next Steps

Key Learnings

- Visual orchestration is the solution to context fragmentation.

- AI speed is not a technical metric; it's a pillar of the user experience.

- Trust is built with transparency, control, and a clear path to improvement.

Immediate Next Steps

- Integrate a real analytics system (e.g., Hotjar) to capture prototype heatmaps.

- Design and prototype the granular control flows and the Emergency Stop button.

- Implement the UI for feedback mechanisms and connect them to a test database.

- Conduct an external accessibility audit (WCAG 2.1) and prepare for a SOC 2 security review.


Let's talk?

Are you looking for a UX designer for Artificial Intelligence who can help broaden perspectives and reduce biases in conversational AI?

Fill out the form below or, if you prefer, write to me directly at info@josegalan.dev and let's see how we can work together.